Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

The breakthrough came months later, when Buhrman was visiting his frequent collaborator Richard Cleve at the University of Waterloo. They decided to focus on an extreme scenario, one where the full memory is very large. If a computer with little free memory can access this massive full memory, would that enable it to solve problems that would be impossible with the free memory alone? It’s like the “hard drive full of family photos” question, but with a hard drive the size of a data center.

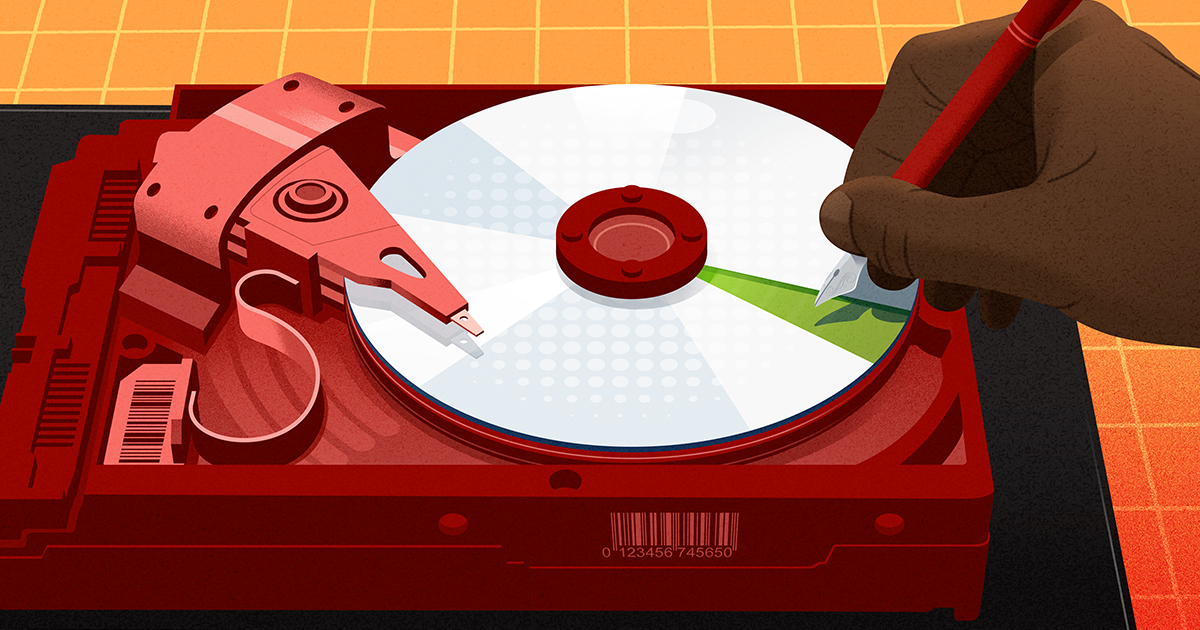

If that extra data is untouchable — you can’t interact with it at all — then it definitely doesn’t help. But what if you’re allowed to tweak some of the bits encoding this data, as long as you promise to reset them when you’re done? You can’t simply keep a record of your changes, since that would take up even more space, so instead you’ll have to ensure that your changes are easily reversible. What’s more, you don’t get to choose the content of the extra data, so whatever you do must work for any possible initial configuration of bits.

Those are pretty stringent constraints, so it wasn’t obvious that the extra memory could ever prove useful. But to their surprise, Buhrman and Cleve showed that, if you tweak bits in just the right way, you really can get extra computational oomph out of a full memory.

“That was a shocker for everyone,” said Loff, who was a graduate student in Buhrman’s group at the time, working on the memory question with his fellow student Florian Speelman. The team soon extended the result to an even larger class of problems, and published their combined results in 2014.

They named the new framework catalytic computing, borrowing a term from chemistry. “Without the catalyst, the reaction would not have proceeded,” said Raghunath Tewari, a complexity theorist at the Indian Institute of Technology, Kanpur. “But the catalyst itself remains unchanged.”

A small band of researchers continued to develop catalytic computing further, but no one even tried to apply it to the tree evaluation problem that had initially inspired Koucký’s quest. For that problem, the remaining open question was whether a small amount of memory could be used for storage and computation simultaneously. But the techniques of catalytic computing relied on the extra, full memory being very large. Shrink that memory, and the techniques no longer work.

Still, one young researcher couldn’t help wondering whether there was a way to adapt those techniques to reuse memory in a tree evaluation algorithm. His name was James Cook, and for him the tree evaluation problem was personal: Stephen Cook, the legendary complexity theorist who invented it, is his father. James had even worked on it in graduate school, though he mostly focused on completely unrelated subjects. By the time he encountered the original catalytic computing paper in 2014, James was about to graduate and leave academia for software engineering. But even as he settled into his new job, he kept thinking about catalytic computing.

“I had to understand it and see what could be done,” he said.

For years, James Cook tinkered with a catalytic approach to the tree evaluation problem in his spare time. He gave a talk about his progress at a 2019 symposium in honor of his father’s groundbreaking work in complexity theory. After the talk, he was approached by a graduate student named Ian Mertz, who’d fallen in love with catalytic computing five years earlier after learning about it as an impressionable young undergrad.

“It was like a baby bird imprinting scenario,” Mertz said.